When prime minister Rishi Sunak met US president Joe Biden on June 8, one of the overriding themes of their talks was how the world’s leading governments should guard against risks posed by artificial intelligence (AI).

Ultimately, Sunak wants an international organisation established, based in London, to effectively be an AI watchdog. He also has plans for the capital to host a conference on the issue later this year.

It would appear that the capabilities and use of AI have reached a tipping point, and people are worried about it.

In May, a group of industry leaders - including Sam Altman, chief executive of OpenAI; Demis Hassabis, chief executive of Google DeepMind; and Dario Amodei, chief executive of Anthropic (three of the leading AI companies) - signed an open letter warning that the “risk of extinction from AI should be a global priority, alongside other societal-scale risks, such as pandemics and nuclear war.”

In less apocalyptic terms, Microsoft published a set of guidelines on how companies should use AI. And in a similar vein, Meta (the parent company of Facebook) and Twitter have both stressed the need for better regulation of AI.

In all the discussions around AI, one issue has been notable by its absence - racism.

I work for the Sir Lenny Henry Centre for Media Diversity, based at Birmingham City University and I focus on issues such as racism, sexism and ableism in journalism and across the media industry.

The Sir Lenny Henry Centre for Media Diversity believes that AI poses an existential threat to diversity in the media industry. According to a survey by the World Association of News Publishers, half of all newsrooms currently use AI tools. These tools, such as ChatGPT, can help journalists with everything from sourcing experts to writing entire pieces. (There are even examples of ChatGPT successfully writing an entire master’s thesis.)

The problem is that many of these AI programs are inherently biased. Let me explain with two simple examples.

Last Tuesday (June 13), the Sir Lenny Henry Centre for Media Diversity tested one of the most popular AI programs used by journalists, Bing, and asked it: “What are the important events in the life of Winston Churchill?”

Bing failed to mention his role in the Bengal famine and his controversial views on race.

Three days prior, on June 10, the Sir Lenny Henry Centre for Media Diversity asked ChatGPT (another popular program used in newsrooms): “Who are the twenty most important actors of the 20th century?”

ChatGPT did not name a single actor of colour.

That means that if a journalist were to rely on these AI programs to help them write a piece on Winston Churchill or Hollywood actors, they would be excluding facts and figures that are disproportionately seen as important to black and Asian people.

Whether or not you agree that the Bengal famine should be mentioned in an account of Churchill’s life, or that all of the important Hollywood actors of the past century were white, what the answers demonstrate is that ChatGPT “views” the world through a certain prism.

In many ways, we should not be surprised by this. The algorithms behind generative AI tools rely on processing large quantities of existing source material. It is commonly acknowledged that existing British journalism suffers from a diversity problem, with an over-representation of white men.

For example, in 2020, Women in Journalism published research showing that in one week in July 2020 - at the height of the Black Lives Matter protests across the world - the UK’s 11 biggest newspapers failed to feature a single byline by a black journalist on their front pages. Taking non-white journalists as a whole, of the 174 bylines examined, only four were credited to journalists of colour.

The same report also found that, in the same week, just one in four front-page bylines across the 11 papers went to women.

Importantly, in the week the study surveyed, the biggest news stories were about Covid-19, Black Lives Matter, the replacement of the toppled statue of the slave trader Edward Colston in Bristol and the appeal over the British citizenship of the Muslim mother, Shamima Begum.

This means that, assuming the algorithms of generative AI programs draw on the stories written by journalists in mainstream newspapers to generate their information, a journalist asking them any questions about the issues in the news that week would overwhelmingly be receiving information from a white, male perspective.

The end result is that generative AI programs, if used inappropriately, will only serve to reinforce and amplify the current and historical diversity imbalances in the journalism industry, effectively building bias on top of bias.

That is why last week the Sir Lenny Henry Centre produced a set of guidelines that it hopes all journalists and newsrooms will adopt.

These guidelines include:

1. Journalists should be aware of built-in bias.

2. Newsrooms should be transparent with their readers when they use AI.

3. Journalists should actively build diversity into the questions they ask the AI programs.

4. Journalists should report mistakes and biases they spot (we’ve reported the biases we’ve highlighted).

5. Newsrooms should never treat the answers provided by AI programs as definitive facts.

While the centre has focused on how to address racism and bias in the use of AI in the media industry, we hope its work also serves as a clarion call for all discussions around the use and threats of AI to make sure that issues of discrimination and historically marginalised groups are considered.

If Sunak really wants the UK to be a world leader in safeguarding against the risks of AI, recognising the importance of tackling racism should be at the heart of any and all of his plans.

(The full set of guidelines and the work of the Sir Lenny Henry Centre for Media Diversity are available here)

Marcus Ryder is the head of external consultancies at the Sir Lenny Henry Centre for Media Diversity. A former BBC news executive, he is also the author of Black British Lives Matter.

Shashi Tharoor

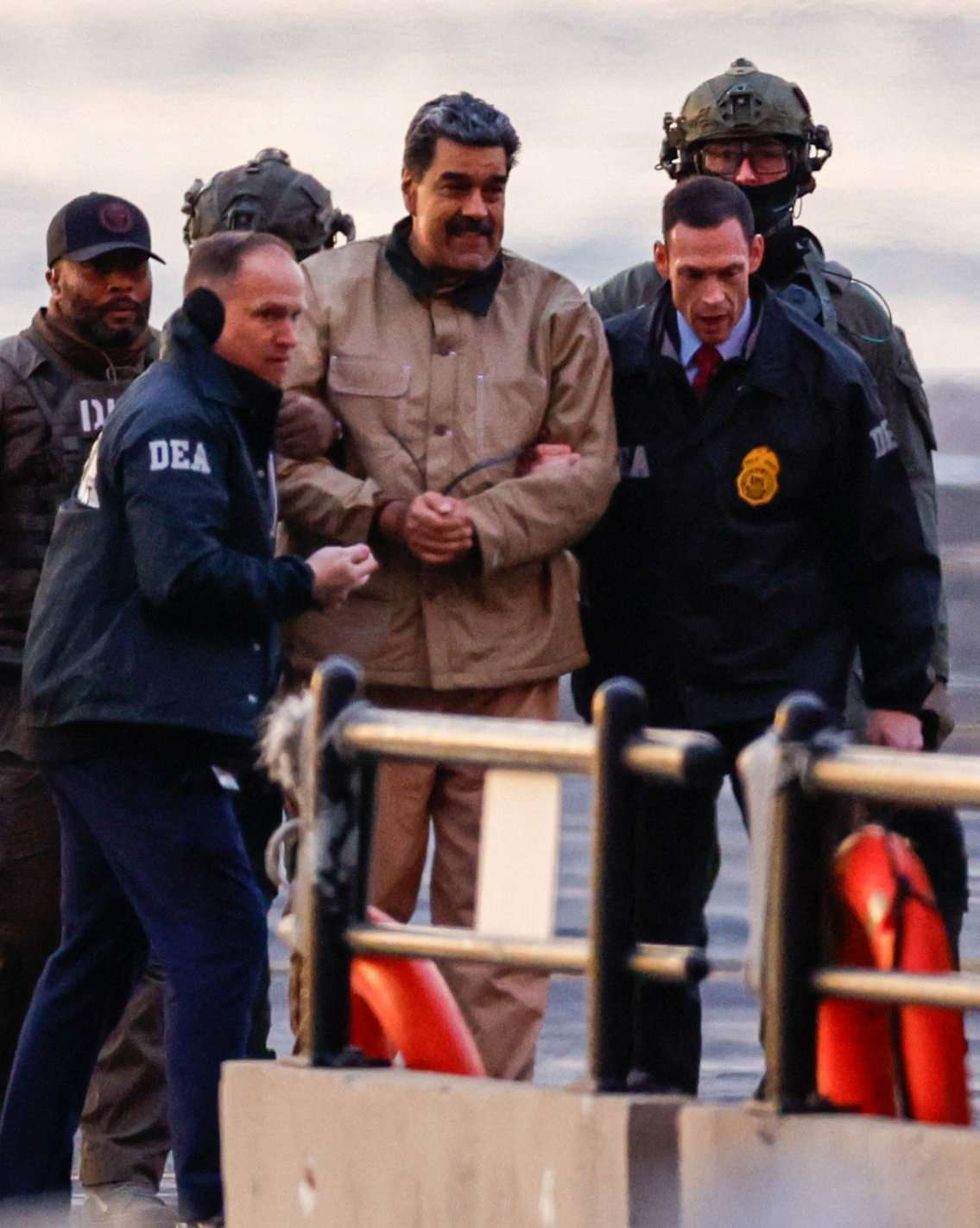

Shashi Tharoor Nicolás Maduro arriving at the Down town Manhattan Heliport.

Nicolás Maduro arriving at the Down town Manhattan Heliport.